Blog

News, announcements, and insights from the Ultravox team

Feb 2, 2026

Why speech-to-speech is the future for AI voice agents: Unpacking the AIEWF Eval

Speech-to-speech is the future of voice AI–and as shown in the newly-released AIEWF eval, Ultravox’s speech-native model can outperform both frontier speech models and text models alike.

One of the ways we regularly test the intelligence of voice AI models is with standardized benchmarks, which test the model’s ability to perform tasks like transcribing audio, solving logic puzzles, or otherwise demonstrating reasoning based on spoken prompts.

But modern voice agents need to do more than just understand speech–they need to reliably follow instructions, participate in extended multi-turn conversations, perform function calls, and reference information from a knowledge base or RAG in responses.

Kwindla Kramer and the team at Daily have taken a new approach to creating a model evaluation framework that tests the capabilities that matter for production voice agents, beyond basic speech understanding. The AIEWF eval considers many of the practical requirements not tested in traditional speech understanding benchmarks–knowledge base use, tool calling, instruction following, and performance across a multi-turn conversation.

Traditional speech understanding evals like Big Bench Audio help us understand how accurately a model understands speech. The new AIEWF eval measures how well a model can reason in speech mode and complete tasks that real-world voice agents need to do.

Taken together, these evals demonstrate why speech-to-speech architectures are poised to overtake the component model for voice AI use cases.

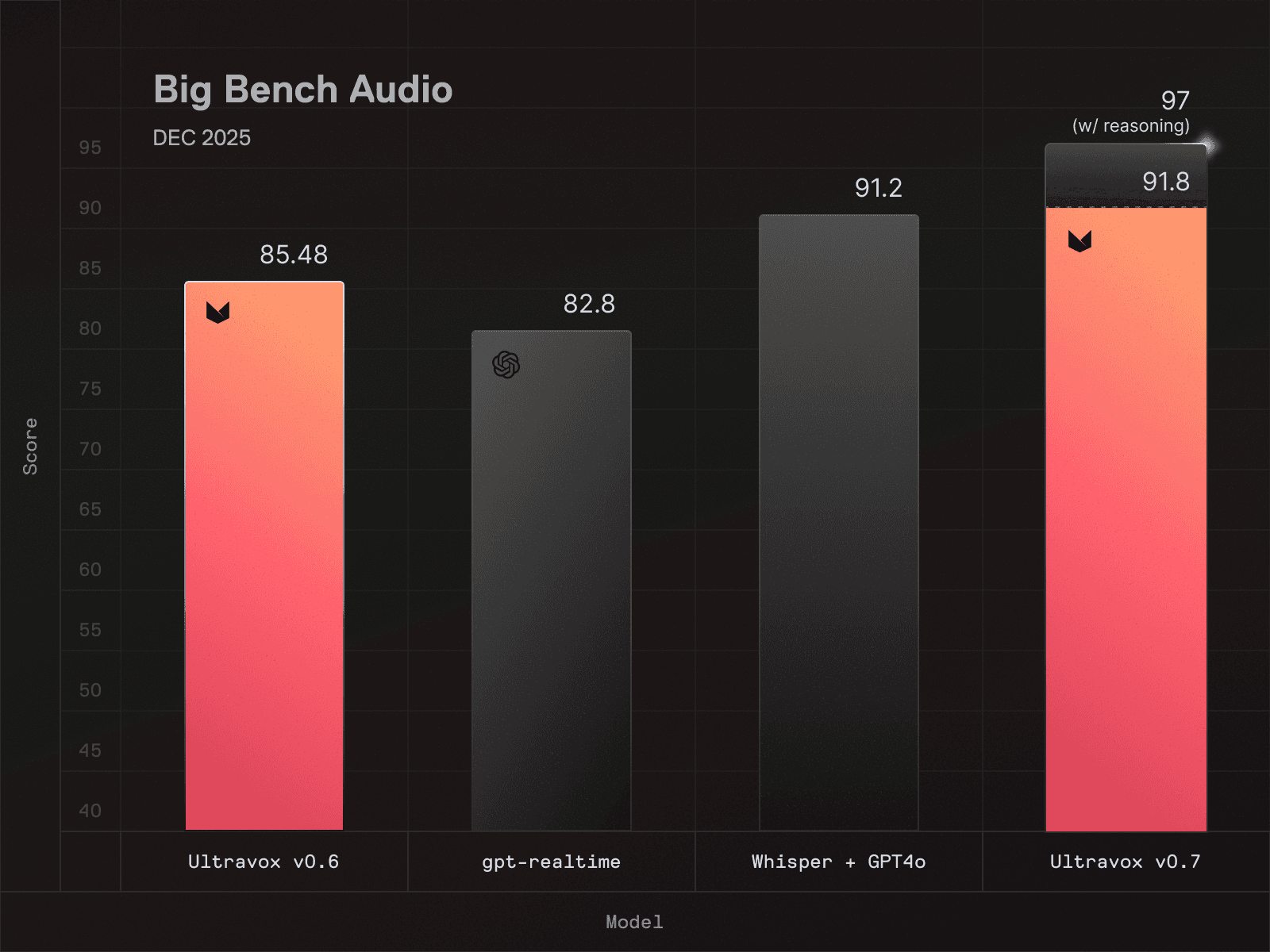

Big Bench Audio: Evaluating speech understanding

Big Bench Audio is a widely-used evaluation framework that tests a model’s speech understanding, logical reasoning, and ability to process complex audio inputs accurately across multiple modes, including speech-to-speech and speech-to-text output. It’s a useful framework for quantifying a model’s inference capabilities given complex audio input.

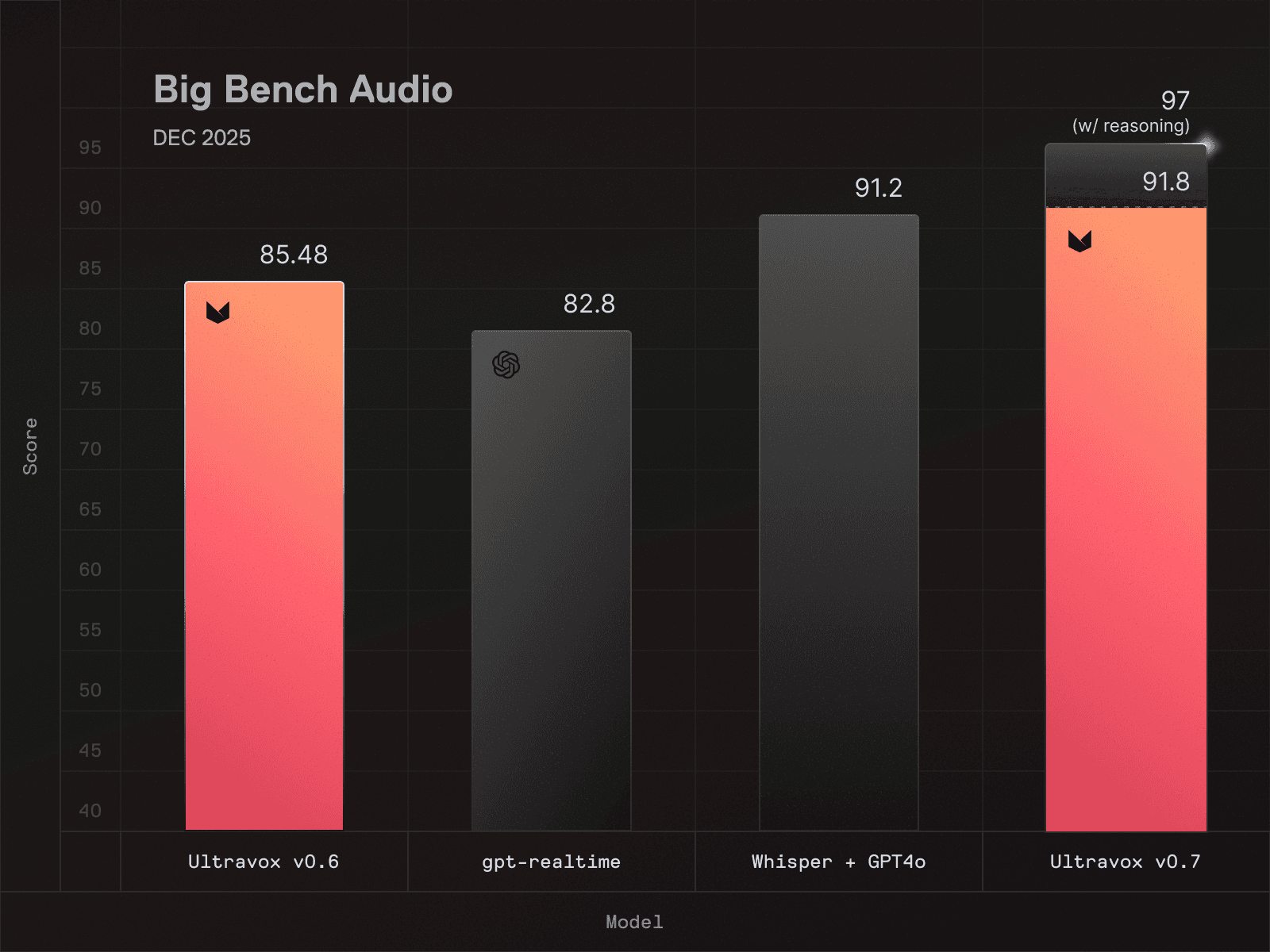

High scores indicate a model that effectively understands spoken language, which requires deep investment in architecture, training, and engineering. (For the record, Ultravox v0.7 scores 91.8% on Big Bench Audio, the highest score of any speech model available at time of writing.)

Naturally our team is excited about our achievement, but we think it’s worth being transparent about the limitations of the test itself.

Big Bench Audio measures model capability in a narrow, relatively isolated context. Here’s an example question from the Big Bench Audio eval for speech-to-speech models (transcribed from original audio sample):

Question: Osvaldo lies. Phoebe says Osvaldo lies. Candy says Phoebe tells the truth. Krista says Candy tells the truth. Delbert says Krista lies. Does Delbert tell the truth? Answer the question.

A model that can correctly answer this question (Delbert does not tell the truth) demonstrates its ability to understand spoken audio as well as its ability to reason logically about speech, both of which are relevant if you’re building a voice agent.
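The reasoning behind that answer is a simple chain: each speaker is truthful exactly when their claim about the previous person matches reality. A minimal sketch of that chain in Python follows; the `resolve` helper and the tuple layout are illustrative choices, not part of the Big Bench Audio harness.

```python
# Each entry: (speaker, subject, speaker_claims_subject_is_truthful)
statements = [
    ("Phoebe", "Osvaldo", False),   # "Osvaldo lies"
    ("Candy", "Phoebe", True),      # "Phoebe tells the truth"
    ("Krista", "Candy", True),      # "Candy tells the truth"
    ("Delbert", "Krista", False),   # "Krista lies"
]


def resolve(known, statements):
    """Propagate truthfulness down the chain of statements, in order."""
    truthful = dict(known)
    for speaker, subject, claim in statements:
        # A speaker is truthful exactly when their claim matches the facts.
        truthful[speaker] = (claim == truthful[subject])
    return truthful


# The puzzle gives us one fact up front: Osvaldo lies.
result = resolve({"Osvaldo": False}, statements)
print(result["Delbert"])  # False: Delbert does not tell the truth
```
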

However, Big Bench Audio alone doesn’t tell you much about how well a model handles messy, real-time, multi-step workflows that voice agents typically face in the real world. There’s no tool calling, no complex instruction following, no background noise or interruptions. The eval is structured as a series of questions and answers that are evaluated independently, rather than as a multi-turn conversation.

In order to more accurately understand how well a speech model will perform in the real world, a test needs to measure how the model handles real-world demands. This is where things get interesting.

The AIEWF eval goes beyond speech understanding, and considers performance across:

Tool usage: Can the model correctly invoke external functions and APIs?

Knowledge base integration: Can it retrieve and synthesize information from connected data sources?

Multi-turn conversations: Can it maintain context and coherence across extended interactions?

Task completion: Can it actually accomplish what users ask it to do?

These factors reflect many of the exact workflows that developers are building when they create voice agents for customer service, scheduling, information retrieval, and countless other applications. Instead of single-turn questions and answers, this framework tests reasoning across a set of 30-turn conversations, evaluating performance in individual conversations as well as the model’s consistency from one conversation to the next.

| Metric | Ultravox v0.7 | GPT Realtime | Gemini Live |
|---|---|---|---|
| Overall accuracy | 97.7% | 86.7% | 86.0% |
| Tool use success (out of 300) | 293 | 271 | 258 |
| Instruction following (out of 300) | 294 | 260 | 261 |
| Knowledge grounding (out of 300) | 298 | 300 | 293 |
| Turn reliability (out of 300) | 300 | 296 | 278 |
| Median response latency | 0.864s | 1.536s | 2.624s |
| Max response latency | 1.888s | 4.672s | 30s |
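To compare the per-category counts on the same scale as the overall accuracy figure, it can help to convert them to pass rates. The short sketch below does only that arithmetic on the numbers reported above; the dictionary layout and the `pass_rate` helper are illustrative.

```python
TURNS = 300  # each category is scored out of 300 turns in the table above

counts = {
    "Ultravox v0.7": {"tool_use": 293, "instruction_following": 294, "knowledge_grounding": 298},
    "GPT Realtime": {"tool_use": 271, "instruction_following": 260, "knowledge_grounding": 300},
    "Gemini Live": {"tool_use": 258, "instruction_following": 261, "knowledge_grounding": 293},
}


def pass_rate(passed, total=TURNS):
    """Return the pass rate as a percentage rounded to one decimal place."""
    return round(100 * passed / total, 1)


rates = {
    model: {category: pass_rate(n) for category, n in per_category.items()}
    for model, per_category in counts.items()
}

for model, per_category in rates.items():
    print(model, per_category)
```

In this table, each model's lowest category rate happens to equal its reported overall accuracy (for example, 293/300 is 97.7%). The results above do not say how the overall figure is computed, so treat that as an observation rather than the eval's definition.
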

When measured using the AIEWF multi-turn eval, Ultravox led the speech-to-speech models by a wide margin: 97.7% overall accuracy, versus 86.7% for GPT Realtime and 86.0% for Gemini Live.

While a few frontier language models were able to outperform Ultravox on these practical benchmarks, it was with a pretty significant caveat: those models are far too slow for real-time voice applications. When it comes to voice agents, low latency is table stakes, not just a nice-to-have; a 2-second pause while waiting for the model to “think” about its answer can undermine the entire conversational experience.

In short, Ultravox delivered frontier-adjacent intelligence at speeds suitable for real-time voice, an overall experience that many developers might not have realized was possible.

Why speech-to-speech beats component stacks

For years, the standard approach to voice AI was building a component stack: a speech-to-text model feeds a language model, which feeds a text-to-speech model. Each component is built and optimized separately before being assembled into a pipeline.

This approach works(ish) for deterministic use cases, but it has fundamental limitations:

Latency accumulates: Every component in the pipeline adds processing time. By the time you've transcribed, reasoned, and synthesized, you've burned through your latency budget.

Errors compound: Transcription mistakes become reasoning mistakes, reasoning mistakes become response mistakes. There's no graceful recovery.

Context gets lost: The prosody, emotion, and nuance in speech disappear the moment you convert to text. The language model never sees them.
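The first two limitations are easy to see with back-of-the-envelope arithmetic. The sketch below uses made-up per-stage numbers (they are not measurements of any real STT, LLM, or TTS service) and assumes stages run one after another and fail independently.

```python
from math import prod

# Hypothetical per-stage figures for a cascaded pipeline:
# (latency in seconds, probability the stage's output is correct).
stages = {
    "stt": (0.30, 0.95),
    "llm": (0.60, 0.95),
    "tts": (0.25, 0.99),
}


def pipeline_latency(stages):
    """Sequential stages: total latency is the sum of stage latencies."""
    return sum(latency for latency, _ in stages.values())


def pipeline_success(stages):
    """Assuming independent failures, per-stage accuracies multiply."""
    return prod(accuracy for _, accuracy in stages.values())


total_latency = pipeline_latency(stages)
end_to_end = pipeline_success(stages)
print(f"latency: {total_latency:.2f}s, end-to-end success: {end_to_end:.3f}")
```

Even with every stage at 95% or better in this toy setup, the end-to-end success rate drops below 90%, and the latency of the slowest path is the sum of all three stages.
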

Ultravox’s speech-to-speech architecture sidesteps all of these issues, because incoming audio never goes through an initial transcription step; instead, the model performs reasoning directly on the original speech signal. The result is lower latency, fewer errors, and responses that actually reflect what the user said—not just the words, but the meaning.

The Bottom Line

Traditional benchmarks are a useful signal of a model's fundamental ability to understand speech. But if you're building voice agents, it’s important to bear in mind that traditional benchmarks only tell part of the story.

Voice agents need a model that excels at both: strong foundational capabilities *and* practical task performance. Ultravox delivers on both fronts. Highest score of any speech model on Big Bench Audio. Top speech model on real-world voice agent tasks. Fast enough for production use.

If you're evaluating models for your next voice agent project, the choice is clear. Ultravox isn't just competitive—it's the best all-around solution available today.

Ready to build with Ultravox? Get started here or contact our team to discuss your voice agent project.

Jan 21, 2026

Inworld TTS 1.5 Voices Are Now Available in Ultravox Realtime

There’s no such thing as “one-size-fits-all” when it comes to voice agents. Accent, tone, and expressiveness can make or break the end user experience, but the “right” choice depends entirely on the product you’re building. That’s why we’re excited to announce that Ultravox Realtime now allows users to build natural, conversational voice agent experiences with pre-built and custom voices from Inworld TTS 1.5.

Emotionally expressive voices are the perfect complement to Ultravox’s best-in-class conversational voice AI, which is why we’ve teamed up with our friends at Inworld. Agents can now be configured to use any of Inworld’s pre-built voices in 15 languages; existing Inworld users who have created custom voice clones can also opt to assign a cloned voice to an Ultravox agent.

Try Inworld voices on Ultravox

To get started, simply log in to your existing Ultravox account (or create a new account).

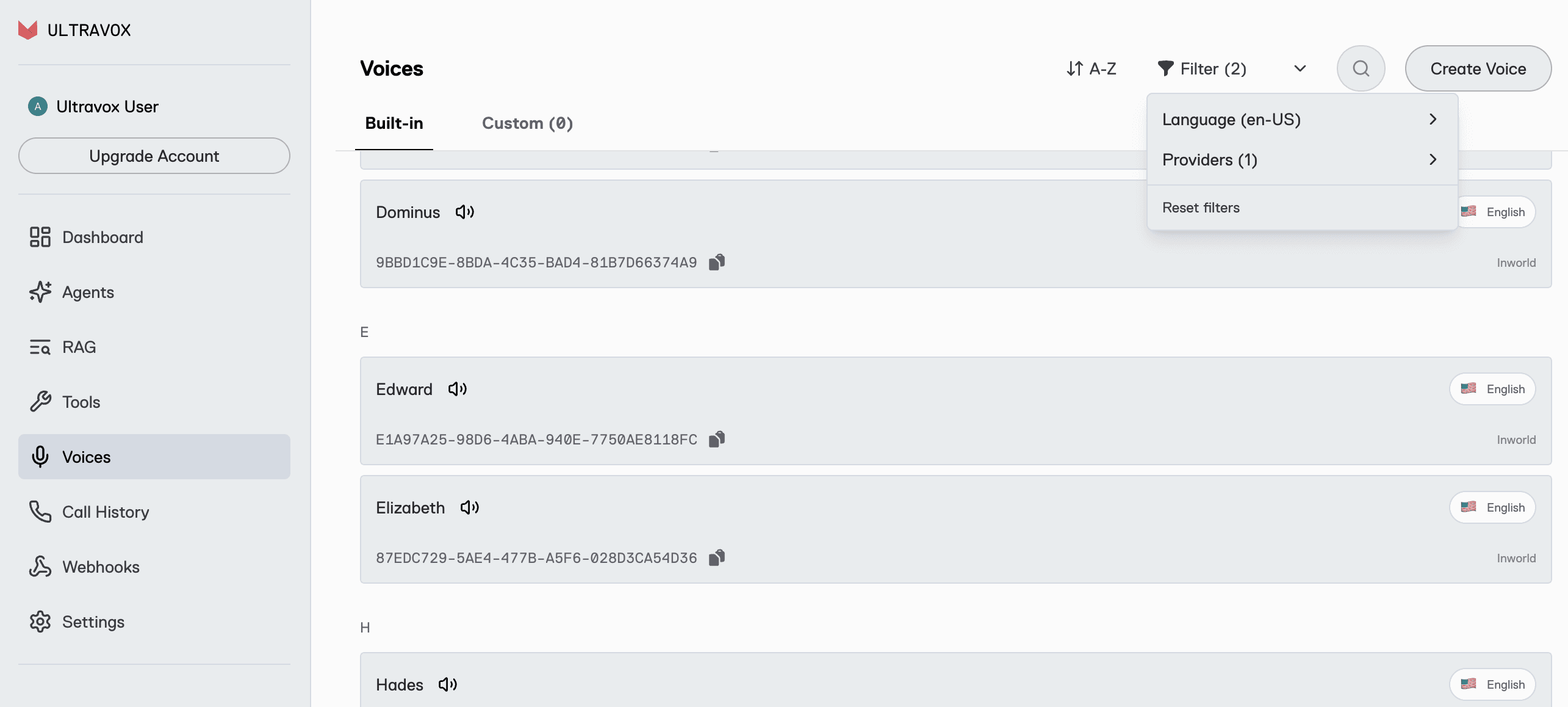

You can browse a list of all available Inworld voices and listen to sample audio by navigating to the Voices screen, where you can filter the list of available voices by language, provider, or both.

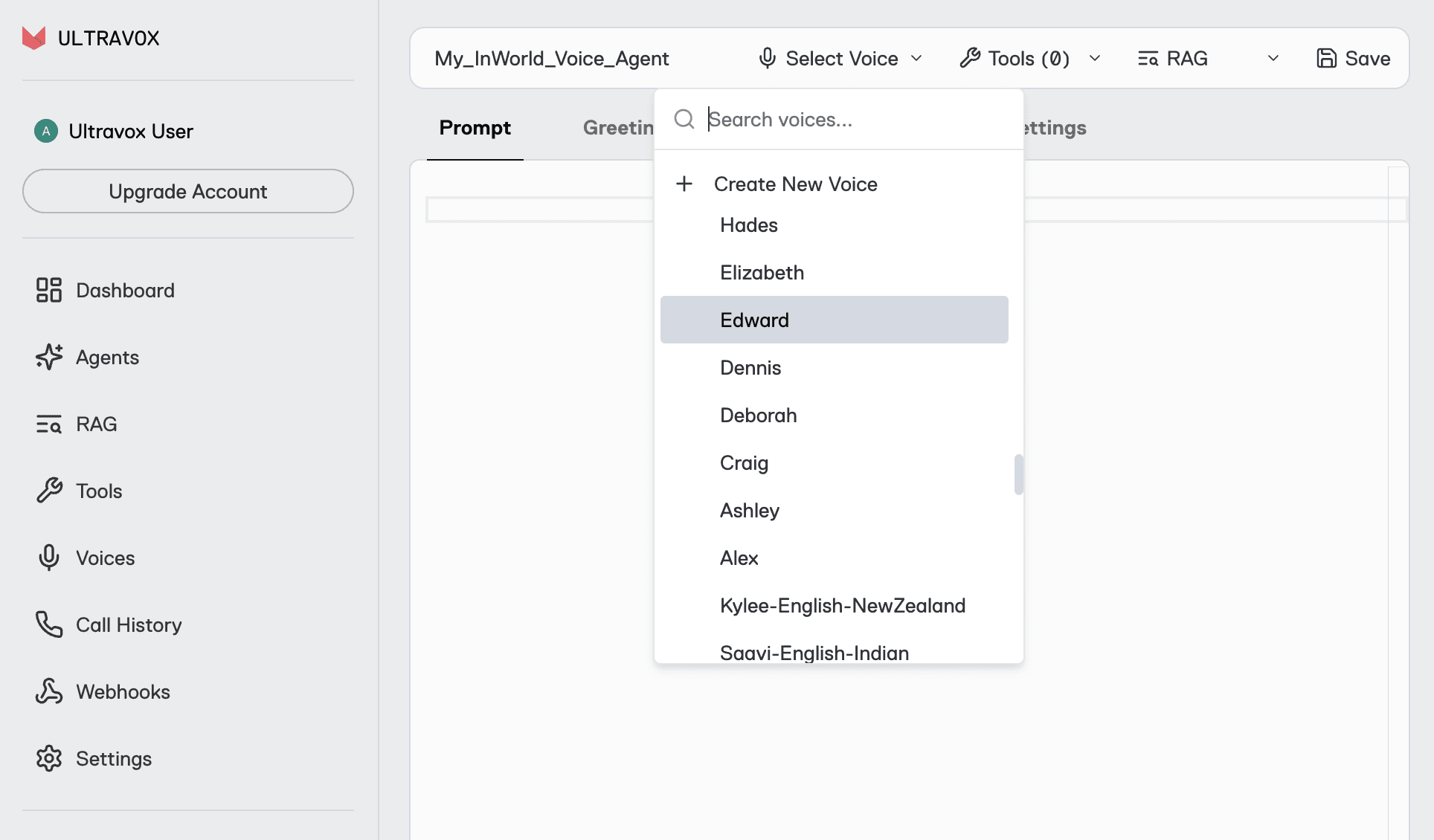

Once you’ve settled on a voice you like, you can either add it to an existing agent or create a new agent and select your preferred voice in the Agents screen.

Earn up to $100 in Ultravox Credits

To celebrate the launch of Inworld TTS 1.5, we’re giving away up to $100 in promotional credits for all our customers who run production voice agents with Inworld voices.

How to qualify

Use Inworld voices for at least 60 call minutes during the promotion period: January 21 - February 28, 2026

Submit the usage survey form before March 13, 2026

Retain (do not delete) any eligible call records until April 1, 2026

For every 60 minutes of call time using Inworld voices during the promotional period, you’ll earn $1.00 in Ultravox account credits – up to $100!
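As a quick way to estimate what a given amount of usage would earn, the rule above can be written as a small function. This is a sketch under one assumption not stated in the post: partial 60-minute blocks earn nothing. The function name is illustrative, and the promotion's FAQ is the authority on edge cases.

```python
CREDIT_PER_BLOCK_USD = 1   # $1.00 per 60 minutes of Inworld call time
BLOCK_MINUTES = 60
MAX_CREDIT_USD = 100       # promotion cap


def promo_credit_usd(inworld_call_minutes):
    """Estimate promotional credit, assuming only full 60-minute blocks count."""
    if inworld_call_minutes < 0:
        raise ValueError("call minutes cannot be negative")
    full_blocks = int(inworld_call_minutes // BLOCK_MINUTES)
    return min(full_blocks * CREDIT_PER_BLOCK_USD, MAX_CREDIT_USD)


for minutes in (59, 60, 150, 6000, 10000):
    print(minutes, "min ->", promo_credit_usd(minutes), "USD")
```

Under these assumptions, the cap is reached at 6,000 minutes (100 hours) of call time with Inworld voices.
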

All Ultravox customers (new and existing) on any paid or pay-as-you-go plan are eligible to participate, and there’s no cap on the number of customers who can take part in this promotion.

For more information and FAQ, please visit this page.

In our mission to deliver the best all-around conversational experience, Ultravox trained the world’s most advanced speech understanding model, capable of understanding real-world speech with no text transcription required. And Ultravox outperforms other speech-to-speech models in benchmarks that account for real-world requirements: accurate tool calling, reliable instruction following, and consistent low latency. If you haven’t given Ultravox a try yet, you can create your account here.

Jan 16, 2026

Introducing the Ultravox Integration for Pipecat

Ultravox Realtime is now available as a speech-to-speech service in Pipecat. Use the deployment stack you’re used to with a model that accepts no compromise.

If you've built voice agents with Pipecat previously, you've faced a fundamental trade-off.

Speech-to-speech models like GPT Realtime and Gemini Live process audio directly, preserving tone and nuance while delivering fast responses. But when your agent needs to follow complex instructions, call tools reliably, or work with a knowledge base, these models often fall short. You get speed and native audio understanding, but at the cost of reliability.

Cascaded pipelines chain together best-in-class STT, LLM, and TTS services to get the full reasoning power of models like Claude Sonnet or GPT-5. But every hop adds latency, and the transcription step loses the richness of spoken language. You get better model intelligence, but sacrifice speed and naturalness.

Ultravox changes the equation

Like other speech-to-speech models, the Ultravox model is trained to understand audio natively, so the incoming signal doesn’t have to be transcribed to text for inference. But unlike other models, Ultravox can match or exceed the intelligence of cascaded pipelines, meaning you no longer need to choose between conversational experience and model intelligence.

You don’t need to take our word for it–in an independent benchmark built by the Pipecat team, Ultravox v0.7 outperformed every other speech-to-speech model tested:

| Metric | Ultravox v0.7 | GPT Realtime | Gemini Live |
|---|---|---|---|
| Overall accuracy | 97.7% | 86.7% | 86.0% |
| Tool use success (out of 300) | 293 | 271 | 258 |
| Instruction following (out of 300) | 294 | 260 | 261 |
| Knowledge grounding (out of 300) | 298 | 300 | 293 |
| Turn reliability (out of 300) | 300 | 296 | 278 |
| Median response latency | 0.864s | 1.536s | 2.624s |
| Max response latency | 1.888s | 4.672s | 30s |

The benchmark reflects real-world conditions and needs, evaluating model performance in multi-turn conversations and considering tool use, instruction following, and knowledge retrieval. These results placed Ultravox ahead of GPT Realtime, Gemini Live, Nova Sonic, and Grok Realtime in head-to-head comparisons using identical test scenarios. Ultravox’s accuracy is on par with traditional text-only models like GPT-5 and Claude Sonnet 4.5, despite returning audio faster than those text models can produce text responses (which, for voice-based use cases, would still require a TTS step to produce audio output).

What this means for your Pipecat application

If you're using a speech-to-speech model today, switching to Ultravox will give you significantly better accuracy on complex tasks (tool calls that actually work, instructions that stick across turns, knowledge retrieval you can rely on) without giving up the low latency and native speech understanding you need.

If you're using a cascaded pipeline, you can switch to Ultravox and unlock the benefits of direct speech processing (faster responses, no lossy transcription, preserved vocal nuance) without sacrificing intelligence.

In either case, our new integration is designed to slot into your existing Pipecat application with minimal friction.

For users with existing speech-to-speech pipelines, the new Ultravox integration should work as a drop-in replacement.

For applications currently built using cascaded pipelines, you’ll replace your current STT, LLM, and TTS services with a single Ultravox service that handles the complete speech-to-speech flow.

Get started today

If you're already running voice agents in production, this is the upgrade path you've been waiting for. If you're just getting started with voice AI, there's never been a better time to build.

Check out this example to see how Ultravox works in Pipecat, then visit https://app.ultravox.ai to create an account and get your API key–no credit card required.

Dec 23, 2025

Thank You for an Incredible 2025

As 2025 winds down, we wanted to say, "thank you." Thank you for building with Ultravox, for pushing us to be better, and for being part of what has been, frankly, a mind-blowing year.

2025 by the Numbers

When we look back at where we started at the beginning of the year, the growth is hard to believe:

18x → How much larger our customer base is now than at the end of last year

38x → How much larger our busiest day was in 2025 vs. 2024

235 → The number of best-ever days on the platform in 2025

None of this happens without you. Every integration you've built, every voice agent you've deployed, every piece of feedback you've shared - it all adds up. You're not just using Ultravox, you're shaping it.

What's New

Before the holiday break, we wanted to get a few things into the hands of our users:

React Native SDK

Many of you asked for it, and it's here. Build native mobile voice experiences with Ultravox:

Source code and example app available in the repo.

Client SDK Improvement

Based on your feedback, we've made a change to how calls start: calls now begin immediately after you join them. No more waiting for end-user mic permission.

Why this matters: Inactivity messages now work as you'd expect. If an end user never starts speaking, the inactivity timeout can end the call automatically. This keeps those errant calls to a minimum and saves you money.

Call Transfers with SIP

Your agents can now transfer calls to human operators with built-in support:

coldTransfer: Immediately hand the call to a human operator. No context, instant handoff. Docs →

warmTransfer: Hand off to a human operator with context about the call, so they're prepped and not going in cold. Docs →

Full guide: SIP Call Transfers →

Here's to What You'll Build Next & A BIG 2026

Voice AI is hitting an inflection point. The technology is finally good enough (fast enough, natural enough, affordable enough) to power experiences that weren't possible even a year ago.

Our 0.7 model release has been benchmarked against every major competitor (Gemini, OpenAI, Amazon Nova) and Ultravox leads in both speed and quality. When you factor in our $0.05/minute pricing, the choice becomes pretty obvious.

Thank you for being a customer. Thank you for believing in what we're building. And thank you for an unforgettable year. We have been hard at work on some new capabilities we can’t wait to get in your hands! Stay tuned for lots more to come in 2026!

Happy holidays from all of us at Ultravox.

Dec 4, 2025

Introducing Ultravox v0.7, the world’s smartest speech understanding model

Today we’re excited to release the newest version of the Ultravox speech model, Ultravox v0.7. Trained for fast-paced, real-world conversation, this is the smartest, most capable speech model that we’ve ever built. It’s the leading speech model available today, capable of understanding real-world speech (background noise and all!) without the need for a separate transcription process.

Since releasing and sharing the first version of the Ultravox model over one year ago, thousands of businesses from around the world have built and scaled real-time voice AI agents on top of Ultravox. Whether it’s for customer service, lead qualification, or just long-form conversation, we heard the same thing from everyone: you loved the speed and conversational experience of Ultravox, but you needed better instruction following and more reliable tool calling.

We’re proud to share that Ultravox v0.7 delivers on both of those without sacrificing the speed, performance, and conversational experience that users love (in fact, we improved inference performance by about 20% when running on our dedicated infrastructure).

One of the most important changes in v0.7 is our move to a new LLM backbone. We ran a comprehensive evaluation of the best open-weight models and ended up choosing GLM 4.6 to serve as our new backbone. Composed of 355B parameters and 160 experts per layer, it substantially outperforms Llama 3.3 70B across instruction following and tool calling. As always, the model weights are available on HuggingFace.

We’re also keeping the cost of using v0.7 on Ultravox Realtime (our platform for building and scaling voice AI agents) the same at $0.05/min. That’s state-of-the-art model performance with speech understanding and speech generation (including ElevenLabs and Cartesia voices) for half the price of most providers.

You can get started with v0.7 today either through the API or through the web interface.

Frontier Speech Understanding

Ultravox v0.7 is state-of-the-art on Big Bench Audio, scoring 91.8% without reasoning and an industry-leading 97% with reasoning enabled.

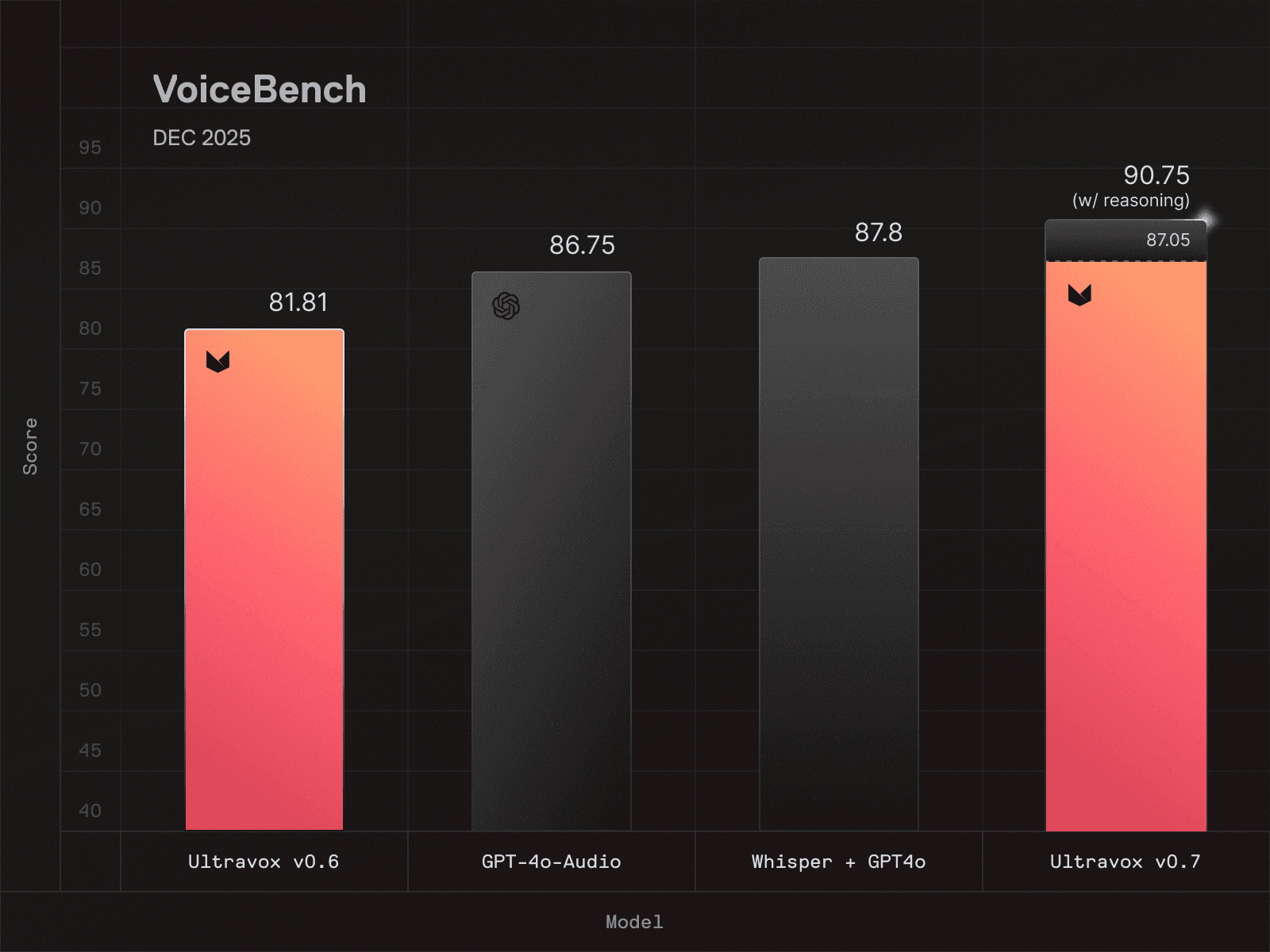

On VoiceBench, v0.7 (without reasoning) ranks first among all speech models. When reasoning is enabled, it extends its lead even further, outperforming both end-to-end models and ASR+LLM component stacks.

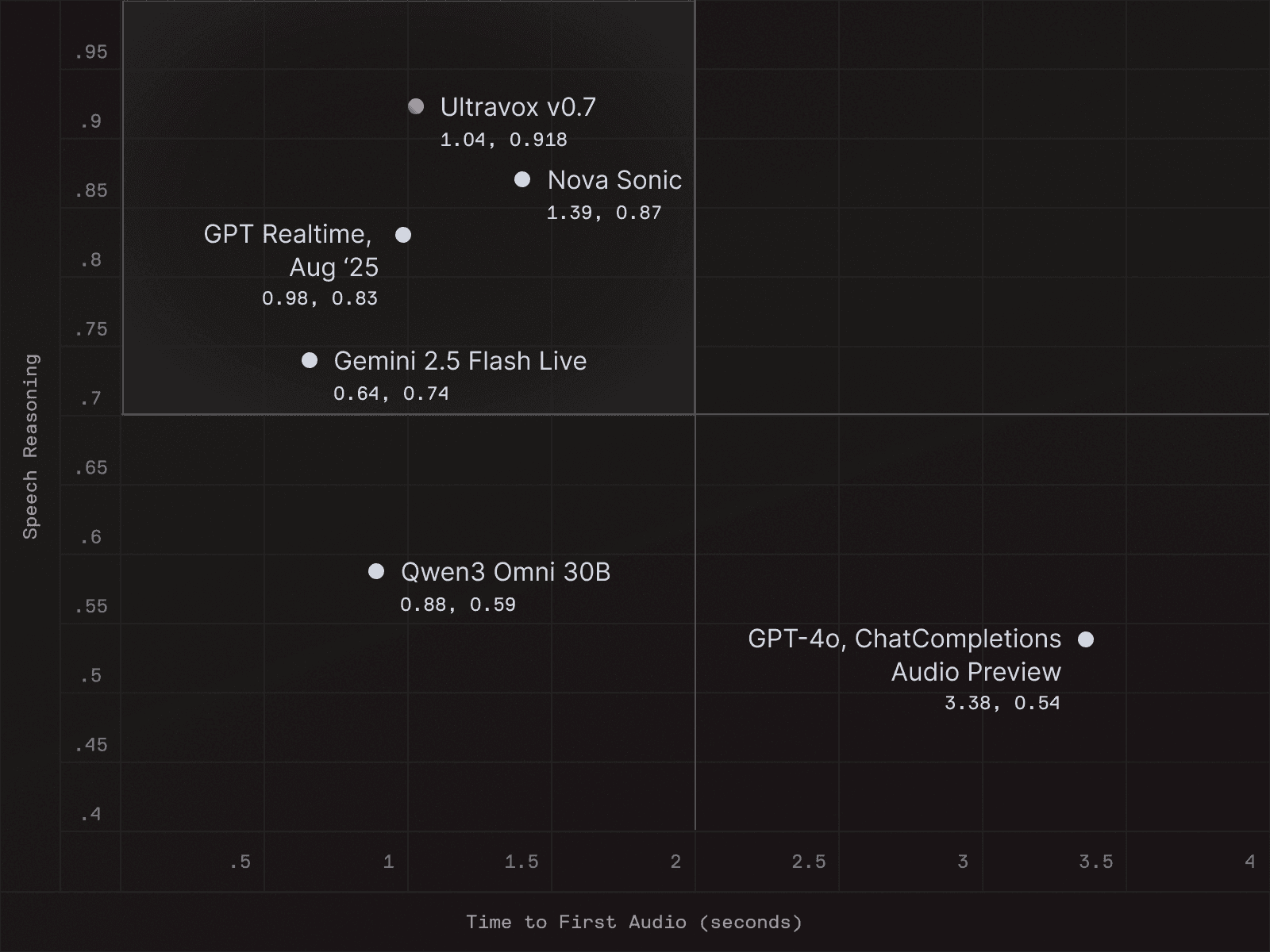

When measured for speed, the Ultravox model running on our dedicated infrastructure stack for Ultravox Realtime achieves speech-to-speech performance that is on par with or better than equivalent systems:

Numbers taken from Artificial Analysis' Speech-to-Speech "Speech reasoning vs. Speed" [source]

Ultravox Realtime Platform Changes

Ultravox v0.7 will become the new default model on Ultravox Realtime starting December 22nd, but you can start using it today by selecting ultravox-v0.7 in the web UI or setting the model param explicitly when creating a call via the API.
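For API users, pinning the model comes down to including the model string in the call-creation request. The sketch below only builds the request and does not send it. The endpoint URL, the `X-API-Key` header name, and the `systemPrompt` field are assumptions to verify against the Ultravox API reference; only the `ultravox-v0.7` model string comes from this post.

```python
import json
import urllib.request

# Assumed endpoint; confirm against the Ultravox API reference before use.
CALLS_URL = "https://api.ultravox.ai/api/calls"


def build_create_call_request(api_key, system_prompt, model="ultravox-v0.7"):
    """Build (but do not send) a create-call request with an explicit model."""
    body = {
        "systemPrompt": system_prompt,  # assumed field name
        "model": model,                 # explicit model string from this post
    }
    return urllib.request.Request(
        CALLS_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "X-API-Key": api_key,       # assumed header name
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_create_call_request("YOUR_API_KEY", "You are a helpful assistant.")
payload = json.loads(request.data)
print(request.get_method(), request.full_url, payload["model"])
```

Sending the request (for example with `urllib.request.urlopen(request)`) is left out on purpose so the sketch can be run without credentials.
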

This default model update will affect all agents using fixie-ai/ultravox as well as agents with unspecified model strings. If you plan to migrate to this model version, we recommend starting testing as soon as possible, as you may need to adjust your prompts to get the best possible experience with your agent after the model transition.

While we think Ultravox v0.7 is the best-in-class option, we’ll continue to make alternate models available for users who need them. So if you’re happy with your agent’s performance using Ultravox v0.6 (powered by Llama 3.3 70B) and don’t plan to change, or if you just need more time for testing before migrating to Ultravox v0.7, you’ll be able to decide when (or if) your agent starts running on the latest model.

You can continue using the Llama version of Ultravox (ultravox-v0.6), but you’ll need to manually set the model string to continue using it. If your agent is already configured to use fixie-ai/ultravox-llama3.3-70b or ultravox-v0.6, then no changes are required–your agent will continue to use the legacy model after December 22.
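If you manage many agents, a quick audit of their model strings shows which ones will move to v0.7 when the default changes. The helper below is a hypothetical sketch that encodes only the rules stated above; the agent names are made up.

```python
# Model strings that follow the platform default, per the rules above.
FOLLOWS_DEFAULT = {"fixie-ai/ultravox"}

# Model strings that stay on the legacy Llama-based model, per the rules above.
PINNED_TO_V06 = {"fixie-ai/ultravox-llama3.3-70b", "ultravox-v0.6"}


def affected_by_default_switch(model_string):
    """Return True if an agent with this model string will move to v0.7."""
    if not model_string:  # unspecified model (None or empty string)
        return True
    return model_string in FOLLOWS_DEFAULT


# Hypothetical agents mapped to their configured model strings.
agents = {
    "support-bot": "fixie-ai/ultravox",
    "scheduler": None,
    "legacy-ivr": "ultravox-v0.6",
    "pinned-llama": "fixie-ai/ultravox-llama3.3-70b",
}

to_review = sorted(name for name, model in agents.items() if affected_by_default_switch(model))
print(to_review)  # ['scheduler', 'support-bot']
```

Agents on other model families are not covered by this check and should be reviewed separately.
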

We’ll also be deprecating support for Qwen3 due to a combination of low usage and sub-optimal performance (GLM 4.6 outperforms Qwen3 across all our tested benchmarks). Users will need to migrate calls and/or agents to another model by December 21 in order to avoid an interruption in service. Gemma3 will continue to operate as it does currently.

Oct 24, 2025

Ultravox Answers: How does zero-shot voice cloning work?

Q: I followed the instructions and cloned my voice to use with my AI agent, but my voice clone doesn’t really sound like me–why isn’t it working?

We’ve encountered this type of user question often enough that we thought it was worthwhile to do a deep dive into how voice cloning works.

The short answer is that there are lots of reasons why your voice clone might sound noticeably different from your actual voice–some of these are easy to fix, while others are due to more structural nuances of how voice cloning and text-to-speech models work.

The basics of voice cloning

Humans learn language through a complex process, first by hearing and recognizing word sounds, then linking words to real-world concepts, and eventually grasping more abstract concepts like the pluperfect tense. So a four-year-old might stumble while pronouncing “apple” or “toothbrush” but will generally recognize that those words correspond to physical objects in the world around them.

Training a text-to-speech (TTS) model is wildly different. Although modern models increasingly incorporate semantic information, their understanding of individual words or sentences remains limited, unlike human comprehension. Instead, models learn the mappings from text sequences to audio waveforms via the patterns of some intermediate representation, such as spectrograms or quantized speech tokens. From there, the model can reproduce patterns that sound like human speech. A TTS model can accurately pronounce the word “toothbrush”, but without any human-like understanding of the object that word represents.

An example of a derived spectrogram, waveform, and pitch contour based on an adult male speaking voice.
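To make the idea of a spectrogram concrete, the toy sketch below splits a synthetic 440 Hz tone into frames and computes the magnitude of each frequency bin per frame with a naive discrete Fourier transform. It uses only the Python standard library and skips the windowing, frame overlap, and mel scaling that production TTS front ends typically apply; the sample rate and frame size are arbitrary choices.

```python
import cmath
import math

SAMPLE_RATE = 8000   # samples per second (arbitrary for this toy example)
FRAME_SIZE = 200     # samples per frame, so each bin is 8000 / 200 = 40 Hz wide


def tone(freq_hz, n_samples):
    """Generate a pure sine tone as a list of samples."""
    return [math.sin(2 * math.pi * freq_hz * n / SAMPLE_RATE) for n in range(n_samples)]


def frame_magnitudes(frame):
    """Naive DFT: magnitude of each non-negative frequency bin for one frame."""
    n = len(frame)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(frame)))
        for k in range(n // 2 + 1)
    ]


def spectrogram(signal, frame_size=FRAME_SIZE):
    """Split the signal into non-overlapping frames; one magnitude row per frame."""
    starts = range(0, len(signal) - frame_size + 1, frame_size)
    return [frame_magnitudes(signal[s:s + frame_size]) for s in starts]


signal = tone(440.0, 4 * FRAME_SIZE)
spec = spectrogram(signal)

# The strongest bin in every frame should sit at the tone's frequency.
peak_hz = [max(range(len(row)), key=row.__getitem__) * SAMPLE_RATE / FRAME_SIZE for row in spec]
print(peak_hz)  # [440.0, 440.0, 440.0, 440.0]
```

Real speech produces a far richer picture than a single bright line, but the structure is the same: time along one axis, frequency along the other, and energy as the value in each cell.
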

Training a TTS model relies on aligned text and audio pairs: give the model a text snippet, and a corresponding audio clip of a human reading the text, and then repeat the process a few million times. Eventually, the model output for a given text sample will sound convincingly like a human reading the text aloud.

The quality and fidelity of speech generation in these models varies between languages because there is wide variety in the availability of text-audio samples for different languages and dialects. Training data for languages like English, Spanish, and Mandarin is extensive and widely available, while Farsi, Yoruba, and Khmer are considered low-resourced languages, meaning they have little in the way of paired text-audio data to train on.

Many indigenous and endangered languages have no standardized TTS datasets at all. If you speak one or more low-resourced languages, please consider contributing to Mozilla’s Common Voice project by recording, verifying, or transcribing audio samples.

Once the model has been trained on a sufficiently large and diverse dataset, it can synthesize the voice of a new human speaker from a remarkably brief sample–typically 10 to 60 seconds of audio, depending on the model’s training–without any additional fine-tuning or adaptation. This is accomplished through a process called zero-shot voice cloning.

In zero-shot voice cloning, the model analyzes a short reference clip to capture a speaker’s vocal traits–qualities like timbre, pitch, and nasality–and encodes these traits as explicit acoustic features or latent embeddings. This encoding can then guide speech generation, so the model can produce new utterances in the same voice from text alone. Some modern TTS models use the reference audio sample as a prompt prefix, but there’s no speaker-specific fine-tuning or training involved.

The advantage of the zero-shot method is that it’s easily accessible–almost anyone can record a 60-second audio clip, and because there’s no fine-tuning of the generated speech beyond the initial embedding, it’s quick to do. The cloned voice won’t be a perfect match (due to the absence of fine-tuning, as well as for reasons we’ll discuss below), but if what you need is a reasonably good replica of a speaker’s voice, the zero-shot method is a great option.

Limitations of the zero-shot voice cloning method

There are some aspects of human speech that even the best TTS models will struggle to replicate using the zero-shot method.

Prosody

Prosody refers to the melody and rhythm of speech: patterns like pitch, timing, and emphasis that make a speaker sound natural and expressive. While a model can often capture the basic qualities of a person’s voice, such as pitch and timbre, it’s much harder to consistently reproduce their prosody. Most speakers will share some general intonation patterns, like raising pitch to indicate a question, but individual speakers will also develop unique habits of pacing, stress, and tone that vary depending on mood and context. Depending on how the text prompt is written (for example, using punctuation marks to denote pauses or capitalization to mark emphasis), generated speech might sound like the right voice, but with the wrong delivery.

Local and complex accents

Even for languages with extensive training data available, specific local or regional accents might be under-represented. For example, French and English are both among the most high-resource languages for TTS, but Belgian French or Scottish English might appear far less frequently in training data. The challenge is even greater for regions with extensive bilingualism (like the largely German-Italian bilingual population of the South Tyrol region), as speakers will naturally mix features like phonetic and rhythmic patterns from multiple languages.

Expressiveness and emotion

For obvious reasons, a 60-second audio sample will generally not capture the full range of a speaker’s emotions (excited, sad, sarcastic, defeated) for the model to reference. While most models can reproduce emotional styles that they’ve been trained on, their ability to model a speaker’s expressiveness is limited if emotion and speaker identity are not clearly disentangled. In some cases, the cloned voice might inherit the emotional tone present in the reference sample, or simply default to an emotionally neutral delivery.

There’s one additional factor that might explain why your voice clone sounds “off”, although it’s not unique to voice cloning. When you speak, you usually hear your voice through two pathways: sound waves traveling through the air into your ears (air conduction), and vibrations traveling directly through your skull to your inner ear (bone conduction).

Solid materials like bone conduct lower frequencies more efficiently than air, so bone conduction essentially gives you an internal bass boost, making your voice sound richer and deeper when you hear yourself speak.

Audio recording, however, captures only the air-conducted sound. When you play it back, you’re missing that extra resonance from bone conduction, so your voice might sound “thinner” and higher-pitched than what you’re used to. Your brain is likely used to the blended version, but audio recordings only give you the external version that everyone else hears.

Since a voice clone embedding is also generated using only air-conducted sound from an audio sample, it might be a pretty close match to the way other people hear your speaking voice, even if it sounds a bit odd compared to the way you’re accustomed to hearing yourself.

Tips on making a better voice clone

While generated speech might not be a perfect replica of your voice, there are some steps you can take when producing an audio sample that will improve the overall quality of your voice clone:

Make sure your recording is free of background noise, especially sound from other voices, music, etc. You should be the only speaker audible on the sample.

The acoustics of the room you record in also matter–if you make your recording in a large, relatively empty space, artifacts from the background reverberation in the room can be inherited by your voice clone.

Maintain a natural speaking pace and tone throughout the entire sample recording–it might be helpful to create a brief script in advance and read it out loud.

The quality of your audio input device matters–recording on your default laptop hardware often produces a much lower-quality sample than recording on a high-quality external microphone.